The Apple Vision Pro features an M2 chip, a ton of sensors and a new R1 chip

Apple unveiled its augmented reality headset, the Apple Vision Pro, at WWDC 2023. After showing the potential use cases for the device, the company said a bit more about the technical specifications.

From the outside, the Apple Vision Pro looks a bit like ski goggles. On the front of the device, Apple is using three-dimensionally formed laminated glass. The glass flows into the frame, a bit like the Apple Watch.

There’s a digital crown on the top of the device that you can use for adjustments. The main body of the device is made of a custom aluminum alloy with some holes for ventilation. There are a ton of chips, sensors and displays in the device.

The rest of the device is a modular system. You can swap the part that rests against your face so that it fits well whether you have a big head or a small head.

At the back of the device, there is a flexible headband that attaches magnetically so that it can also be easily swapped. It looks a bit like the Alpine Loop on the Apple Watch Ultra.

On each side of the device, there is a speaker next to your ear that delivers spatial audio. Apple has developed a technology called audio ray tracing for spatial audio.

Image Credits: Apple

Inside the headset, Apple has partnered with Zeiss for glasses that magnetically attach to the lenses, with vision correction if needed.

When it comes to the main system on a chip, Apple uses its own Apple M2 chip. The display system uses micro OLED so that Apple can fit 64 pixels in the space of an iPhone pixel. Of course, those pixels will sit much closer to your eyes than a phone screen does, so pixel density matters all the more.

Each pixel is 7.5 microns wide, and there are 23 million pixels across two panels, each the size of a postage stamp. For reference, a 4K TV features a bit more than 8 million pixels.
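To put those numbers in perspective, here is a rough back-of-the-envelope sketch. It assumes that "4K" means the common UHD resolution of 3840 by 2160, that the 23 million pixels are split evenly between the two panels, and that the 7.5-micron figure is the pixel pitch with no gap between pixels. None of these assumptions are confirmed by Apple, so treat the results as ballpark figures.

```python
# Figures quoted in the article.
TOTAL_PIXELS = 23_000_000      # across both panels
PANEL_COUNT = 2
PIXEL_WIDTH_MICRONS = 7.5

# Assumption: "4K TV" means UHD, 3840 x 2160.
UHD_WIDTH, UHD_HEIGHT = 3840, 2160

# Assumption: pixels are split evenly between the two panels.
pixels_per_panel = TOTAL_PIXELS // PANEL_COUNT

uhd_pixels = UHD_WIDTH * UHD_HEIGHT
panel_vs_uhd = pixels_per_panel / uhd_pixels

# Assumption: 7.5 microns is the pixel pitch (no spacing between pixels).
MICRONS_PER_INCH = 25_400
pixels_per_inch = MICRONS_PER_INCH / PIXEL_WIDTH_MICRONS

print(f"Pixels per panel: {pixels_per_panel:,}")
print(f"Pixels in a 4K UHD frame: {uhd_pixels:,}")
print(f"One panel vs. 4K UHD: {panel_vs_uhd:.2f}x")
print(f"Approximate density: {pixels_per_inch:,.0f} pixels per inch")
```

Under these assumptions, each panel holds about 11.5 million pixels, roughly 1.4 times the pixel count of a 4K UHD frame.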

Apple promises video that can be rendered at “true 4K resolution with wide colors.” And text is supposed to “look super sharp from any angle.” We will have to check that if we can get some hands-on time with the Apple Vision Pro.

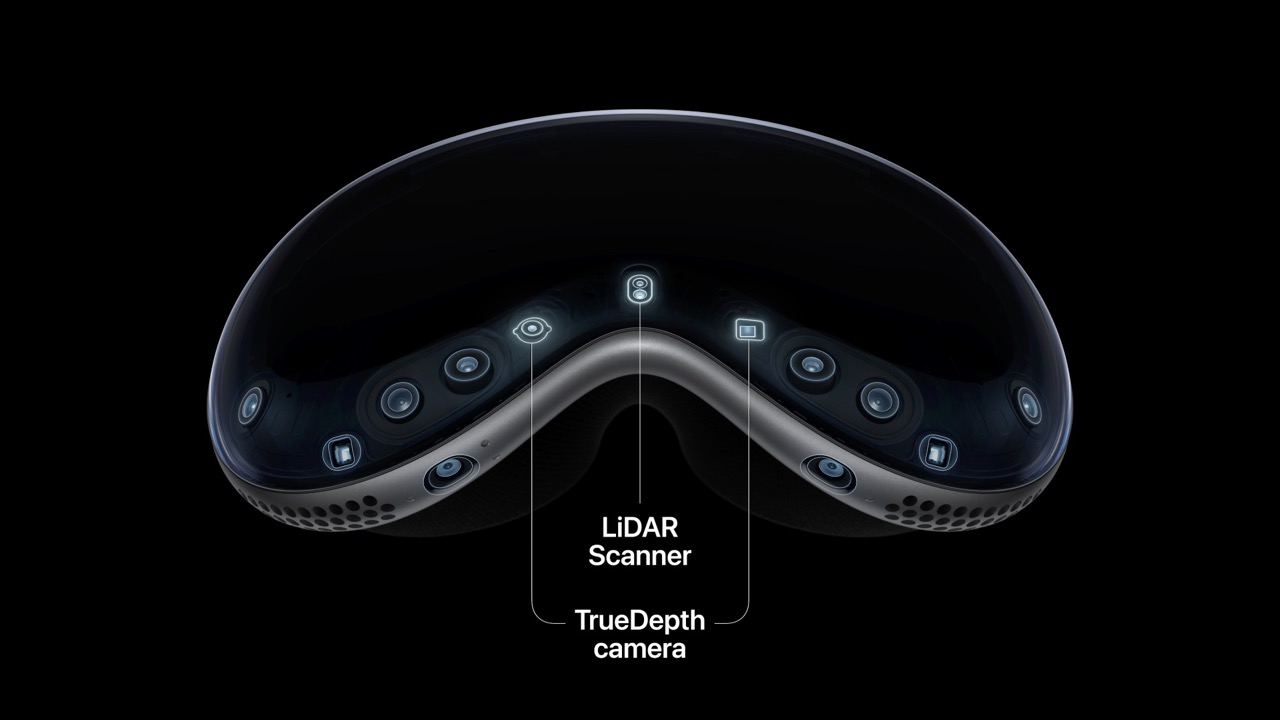

Overall, there are 12 cameras, five sensors and six microphones. On the outside, there are two cameras pointing at the real world, and two cameras pointing downward to track your hands. There’s a lidar scanner and a TrueDepth camera.

Inside the device, there are two IR cameras and a ring of LEDs to track your eyes. With this eye-tracking technology, Apple can display your eyes on the outside of the device thanks to a 3D display that sort of makes the device look transparent. Apple calls this feature EyeSight.

There’s also a new R1 chip that has been specifically designed for real-time processing of the real world. It is also supposed to reduce motion sickness.

Finally, yes, the device works with an external battery pack that you can put in your pocket, for instance. It looks like a smooth rectangular pebble. It uses a proprietary connector that attaches to the side of the headset. Apple promises two hours of battery life, but you can also use the device when plugged in.

The company expects to sell the Apple Vision Pro for $3,499 and it will be available for purchase in early 2024.


The Apple Vision Pro features an M2 chip, a ton of sensors and a new R1 chip by Romain Dillet originally published on TechCrunch