Discord is testing parental controls that allow for monitoring of friends and servers

New usernames aren’t the only change coming to the popular chat app Discord, now used by 150 million people every month. The company is also testing a suite of parental controls that would allow for increased oversight of Discord’s youngest users, TechCrunch has learned and Discord confirmed. In a live test running in Discord’s iOS app in the U.S., the company introduced a new “Family Center” feature, where parents will be able to configure tools that allow them to see the names and avatars of their teen’s recently added friends, the servers the teen has joined or participated in, and the names and avatars of users they’ve directly messaged or engaged with in group chats.

However, Discord clarifies in an informational screen that, to respect teens’ privacy, parents will not be able to view the content of their teen’s messages or calls.

This approach, which walks a fine line between the need for parental oversight and a minor’s right to privacy, is similar to how Snapchat implemented parental controls in its app last year. Like Discord’s system, Snapchat’s only gives parents insight into who their teen is talking to and friending, not what they’ve typed or the media they’ve shared.

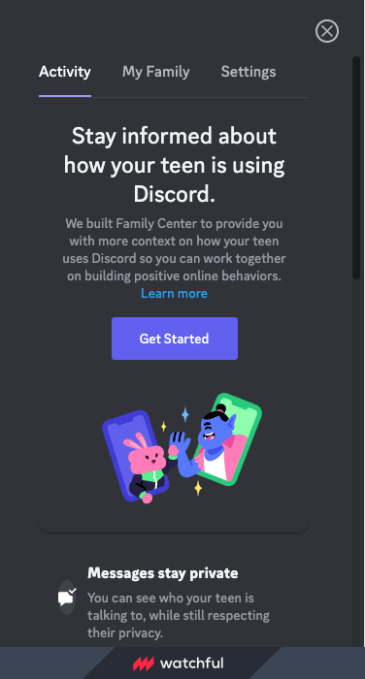

Users who are part of the Discord test will see the new Family Center hub linked under the app’s User Settings section, below the Privacy & Safety and Profiles sections. From here, parents are able to read an overview of the Family Center features and click a button to “Get Started” when they’re ready to set things up.

Image Credits: Discord screenshot via Watchful.ai

Discord explains on this screen that it “built Family Center to provide you with more content on how your teen uses Discord so you can work together on building positive online behaviors.” It then details the various parental controls, which will allow parents to see who their teen is chatting with and friending, and which servers the teen joins and participates in.

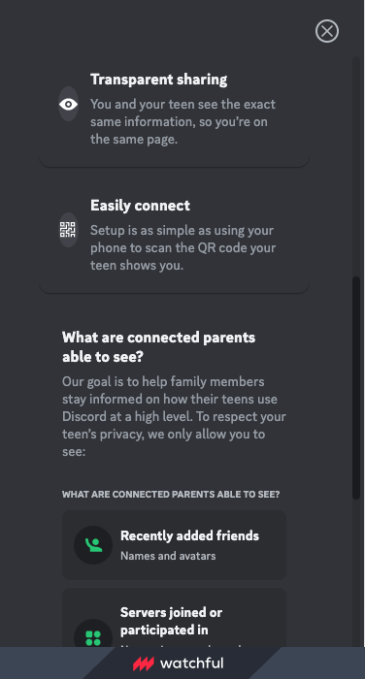

As on TikTok, parents can scan a QR code provided by the teen to put the account under their supervision.

Image Credits: Discord screenshot via Watchful.ai

The screenshots were discovered by app intelligence firm Watchful.ai. In addition, a handful of users had posted their own screenshots on Twitter when they encountered the new experience earlier this year, or had simply remarked on the feature when coming across it in the app.

We reached out to Discord for comment on the tests and shared some of the screenshots. The company confirmed the development but didn’t offer a firm commitment as to when or if the parental control feature would actually roll out.

“We’re always working to improve our platform and keep users safe, particularly our younger users,” a Discord spokesperson said. “We’ll let you know if and when something comes of this work,” they added.

The company declined to answer our questions about the reach of the tests, or whether it planned to offer the tools outside the U.S., among other things.

Though Discord today is regularly used by a younger, Gen Z crowd, thanks to its roots as a home for gamers, it’s often left out of the larger conversation around the harms to teens caused by social media use. While execs from Facebook, Twitter, Instagram, Snap, YouTube, and TikTok have had to testify before Congress on this topic, Discord has been able to sit on the sidelines.

Hoping to get ahead of expected regulations, most major social media companies have since rolled out parental control features for their apps, if they didn’t already offer such tools. YouTube and Instagram announced plans for parental controls in 2021, and Instagram finally launched them in 2022 with other Meta apps to follow. Snapchat also rolled out parental controls in 2022. And TikTok, which already had parental controls before the Congressional inquiries began, has been beefing them up in recent months.

But with the lack of regulation at the federal level, several U.S. states have begun passing their own laws around social media use, including new restrictions on social media apps in states like Utah and Montana, as well as broader legislation to protect minors, like California’s Age Appropriate Design Code Act, which goes into effect next year.

Discord, so far, has flown under the radar, despite warnings from child safety experts, law enforcement, and the media about the dangers the app poses to minors, amid reports that groomers and sexual predators have been using the service to target children. The nonprofit National Center on Sexual Exploitation even added Discord to its “Dirty Dozen List” over what it says are the company’s failures to “adequately address the sexually exploitative content and activity on its platform.”

The organization specifically calls out Discord’s lack of meaningful age verification technology, insufficient moderation, and inadequate safety settings.

Today, Discord offers its users access to an online safety center that guides users and parents on how to manage a safe Discord account or server, but it doesn’t go so far as to actually provide parents with tools to monitor their child’s use of the service or block them from joining servers or communicating with unknown persons. The new parental controls won’t address the latter two concerns, but they are at least an acknowledgment that some sort of parental controls are needed.

This is a shift from Discord’s earlier position on the matter. In early 2021, the company told The Wall Street Journal that its philosophy was to put users first, not their parents, and said it wasn’t planning on adding such a feature.

Discord is testing parental controls that allow for monitoring of friends and servers by Sarah Perez originally published on TechCrunch